Deep Dive Into / Internals of

Kafka Streams

Kafka Streams 2.2.0

@jaceklaskowski / StackOverflow / GitHub

The "Internals" Books: Kafka Streams / Apache Kafka

Jacek is best known for the online "Internals" books:

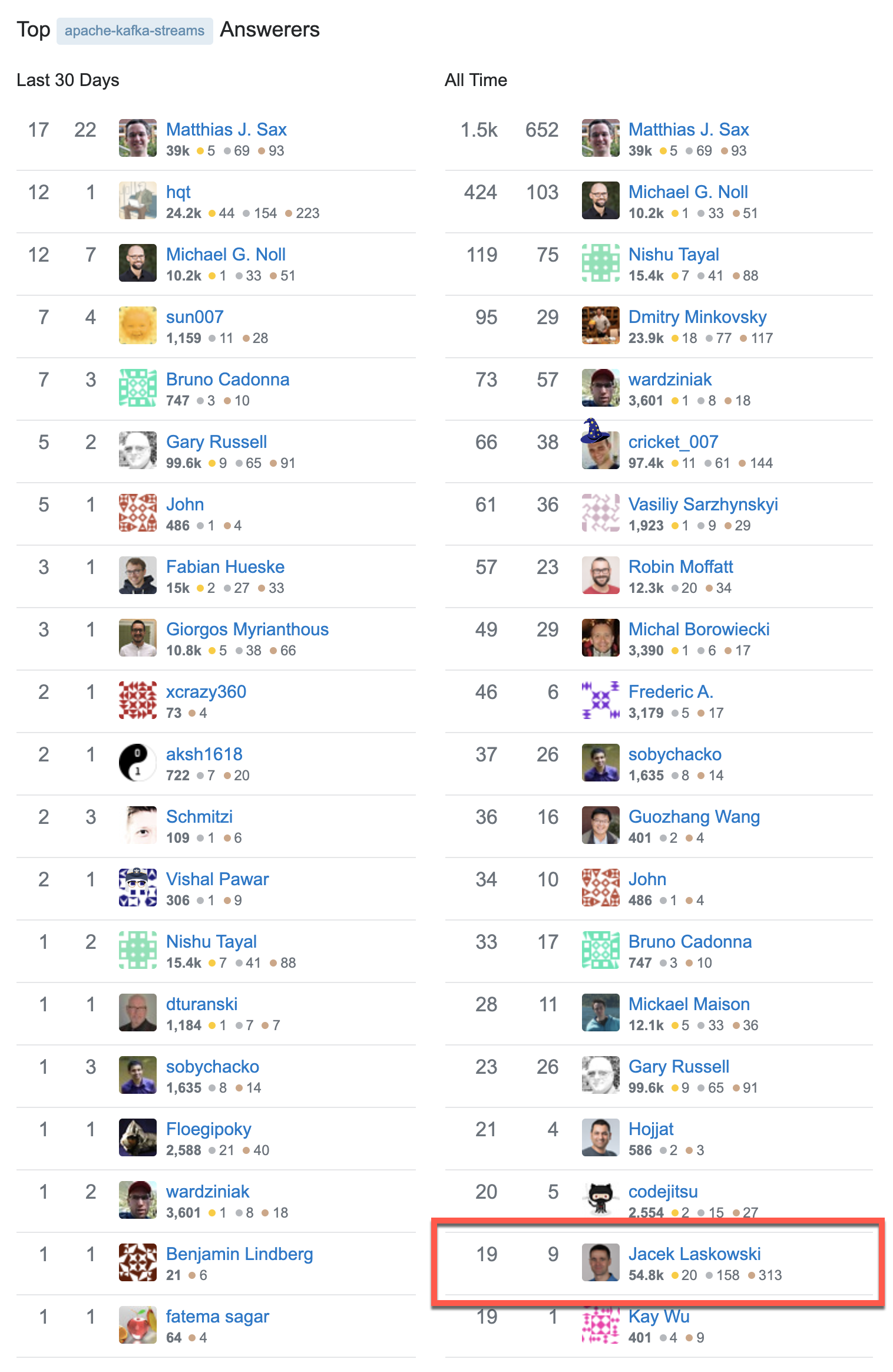

Jacek is "active" on StackOverflow 🥳

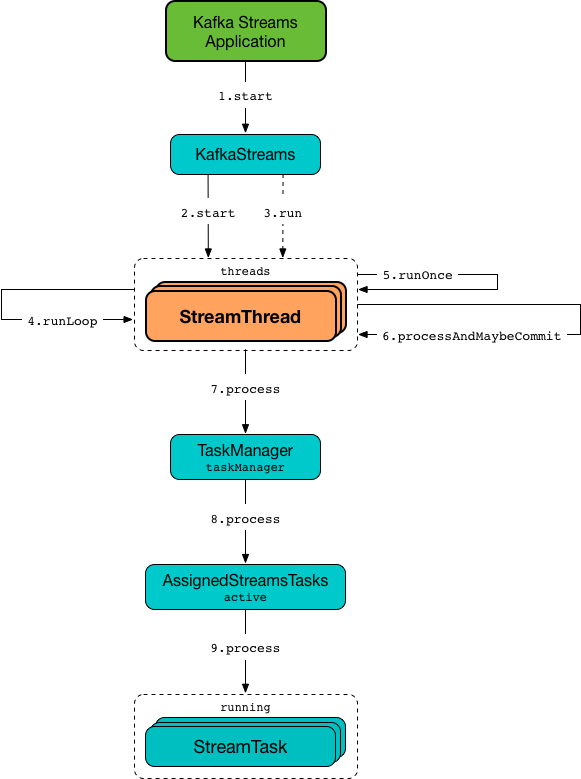

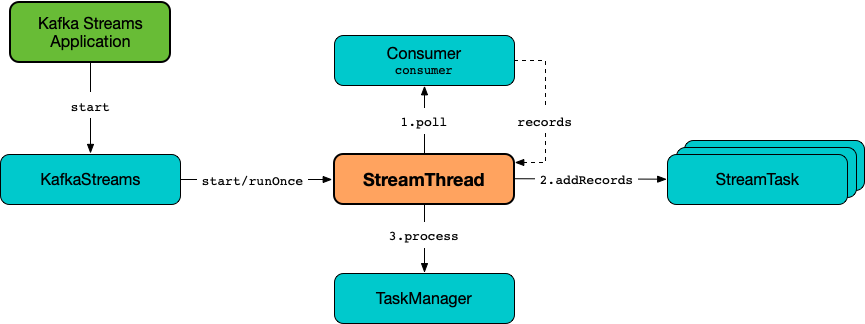

StreamThread (2 of 4)

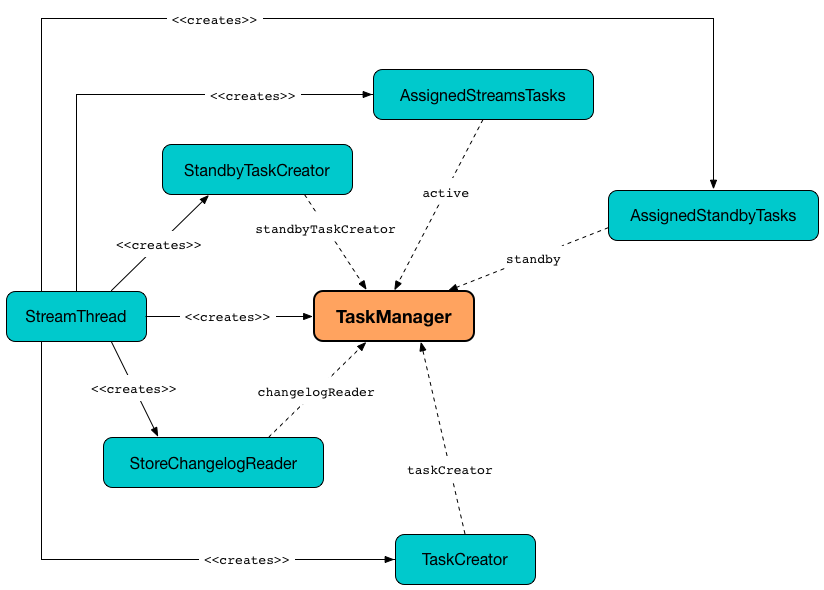
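Each KafkaStreams instance runs one or more StreamThreads, and each StreamThread owns its own KafkaConsumer. The number of threads is set with the `num.stream.threads` config (default 1). Below is a minimal sketch that builds such a configuration with plain `java.util.Properties`. The application id `internals-demo` and the broker address `localhost:9092` are placeholders, and the helper `streamsProperties` is hypothetical.

```java
import java.util.Properties;

// Sketch: configuring how many StreamThreads a KafkaStreams instance starts.
// "num.stream.threads" is the Kafka Streams config key (default 1).
// The application id and broker address are placeholders.
class StreamThreadConfig {

    // Hypothetical helper; this sketch itself rejects values below 1.
    static Properties streamsProperties(int numStreamThreads) {
        if (numStreamThreads < 1) {
            throw new IllegalArgumentException("expected at least one stream thread");
        }
        Properties props = new Properties();
        props.put("application.id", "internals-demo");     // placeholder
        props.put("bootstrap.servers", "localhost:9092");  // placeholder
        // One StreamThread (with its own consumer) per configured thread.
        props.put("num.stream.threads", Integer.toString(numStreamThreads));
        return props;
    }

    public static void main(String[] args) {
        Properties props = streamsProperties(4);
        // With kafka-streams on the classpath, these would be passed to
        // new KafkaStreams(topology, props).
        System.out.println(props.getProperty("num.stream.threads"));
    }
}
```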

TaskManager (2 of 2)

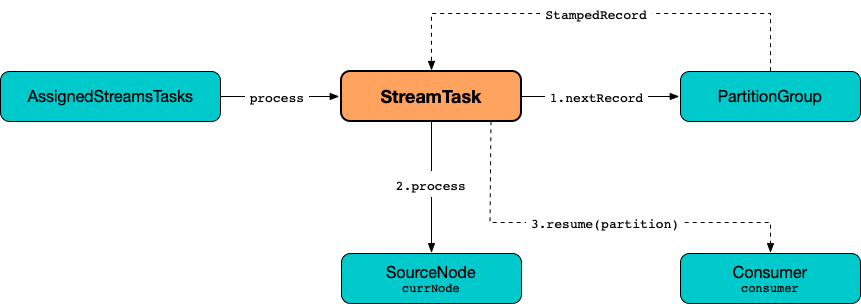

Stream Processor Tasks (2 of 3)

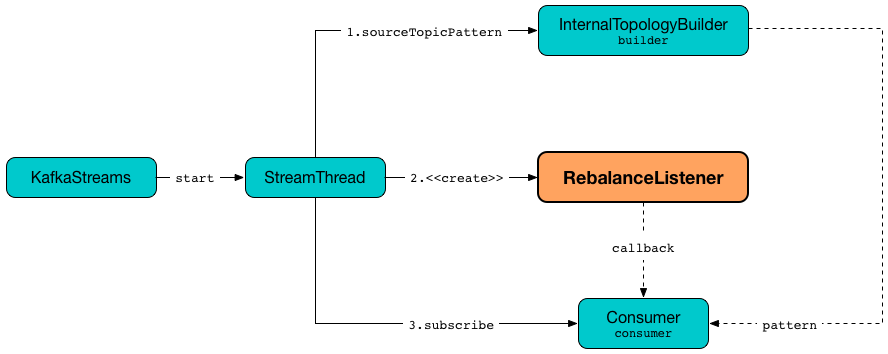
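Kafka Streams creates one stream task per input partition of each sub-topology. The task count of a sub-topology is the largest partition count among its source topics, and a task id prints as `<subtopologyId>_<partition>`. The following stdlib-only sketch models that rule. It is a simplified illustration, not the Kafka Streams implementation, and `TaskIdModel` with its inputs is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified model (not the Kafka Streams implementation): derive the
// stream task ids "<subtopologyId>_<partition>" a topology produces.
class TaskIdModel {

    // sourcePartitions: sub-topology id -> partition counts of its source topics
    static List<String> taskIds(Map<Integer, List<Integer>> sourcePartitions) {
        List<String> ids = new ArrayList<>();
        // TreeMap gives a deterministic order by sub-topology id.
        for (Map.Entry<Integer, List<Integer>> e : new TreeMap<>(sourcePartitions).entrySet()) {
            int maxPartitions = 0;
            for (int p : e.getValue()) {
                maxPartitions = Math.max(maxPartitions, p);
            }
            // One task per partition number of the widest source topic.
            for (int partition = 0; partition < maxPartitions; partition++) {
                ids.add(e.getKey() + "_" + partition);
            }
        }
        return ids;
    }

    public static void main(String[] args) {
        Map<Integer, List<Integer>> topology = Map.of(
            0, List.of(3),      // sub-topology 0 reads one 3-partition topic
            1, List.of(2, 2));  // sub-topology 1 reads two 2-partition topics
        System.out.println(taskIds(topology)); // [0_0, 0_1, 0_2, 1_0, 1_1]
    }
}
```

These task ids are the units that get distributed across StreamThreads (and across instances) during a rebalance.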

RebalanceListener (2 of 2)
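A StreamThread reacts to rebalances through a listener implementing the consumer's `ConsumerRebalanceListener` callbacks, `onPartitionsRevoked` and `onPartitionsAssigned`. Along the way it moves through the `PARTITIONS_REVOKED` and `PARTITIONS_ASSIGNED` thread states. The following stdlib-only sketch models that sequence under the eager rebalance protocol. It assumes plain strings in place of `TopicPartition` and a simple set in place of task suspension and creation. The class `RebalanceListenerModel` is hypothetical, and the real thread's later return to `RUNNING` is omitted.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Simplified, stdlib-only model. The real listener implements
// org.apache.kafka.clients.consumer.ConsumerRebalanceListener, whose
// callbacks take Collection<TopicPartition>; partitions are plain strings here.
class RebalanceListenerModel {

    // Mirrors the StreamThread states involved in a rebalance.
    enum State { RUNNING, PARTITIONS_REVOKED, PARTITIONS_ASSIGNED }

    private State state = State.RUNNING;
    private final Set<String> owned = new TreeSet<>();
    final List<State> transitions = new ArrayList<>();

    // Eager protocol: called before the consumer rejoins the group.
    void onPartitionsRevoked(Collection<String> partitions) {
        owned.removeAll(partitions);   // stand-in for suspending tasks
        setState(State.PARTITIONS_REVOKED);
    }

    // Called once the new assignment is known.
    void onPartitionsAssigned(Collection<String> partitions) {
        owned.addAll(partitions);      // stand-in for creating tasks
        setState(State.PARTITIONS_ASSIGNED);
    }

    private void setState(State next) {
        state = next;
        transitions.add(next);
    }

    State state() { return state; }

    Set<String> owned() { return owned; }
}
```

Under the eager protocol every owned partition is revoked before the new assignment arrives, which is why the model always passes through `PARTITIONS_REVOKED` first.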